How to run Stable Diffusion on Google Colab (AUTOMATIC1111)

- Author: F4AI

This is a step-by-step guide for using the Google Colab notebook in the Quick Start Guide to run AUTOMATIC1111. This is one of the easiest ways to use AUTOMATIC1111 because you don’t need to deal with the installation.

See installation instructions on Windows PC and Mac if you prefer to run locally.

This notebook is designed to share models in Google Drive with other notebooks from this site.

Google has blocked usage of Stable Diffusion with a free Colab account. You need a paid plan to use this notebook.

Table of Contents

- What is AUTOMATIC1111?

- What is Google Colab?

- Alternatives

- Step-by-step instructions to run the Colab notebook

- ngrok (Optional)

- When you are done

- Computing resources and compute units

- Models available

- Other models

- Installing models

- Installing extensions from URL

- Extra arguments to webui

- Version

- Secrets

- Extensions

- Frequently asked questions

- Next Step

What is AUTOMATIC1111?

Stable Diffusion is a machine-learning model. It is not very user-friendly by itself: you need to write code to use it. Most users use a GUI (Graphical User Interface) instead. Rather than writing code, you type prompts in a text box and click buttons to generate images.

AUTOMATIC1111 is one of the first Stable Diffusion GUIs developed. It supports standard AI functions like text-to-image, image-to-image, upscaling, ControlNet, and even training models (although I don’t recommend it).

What is Google Colab?

Google Colab (Google Colaboratory) is an interactive computing service offered by Google. It is a Jupyter Notebook environment that allows you to execute code.

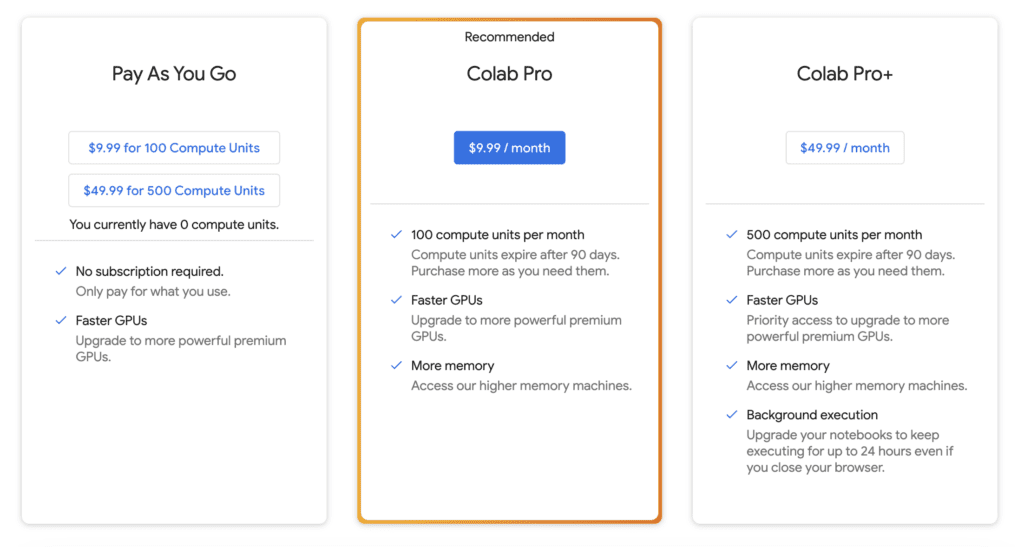

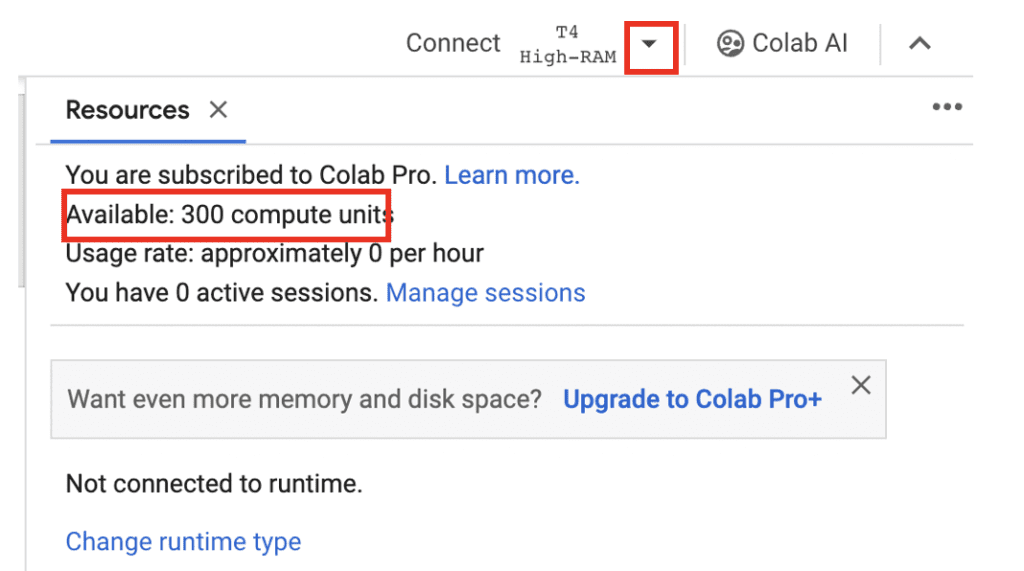

Google offers three paid plans: Pay As You Go, Colab Pro, and Colab Pro+. You need the Pro or Pro+ plan to use all the models. I recommend the Colab Pro plan. It gives you 100 compute units per month, which is about 50 hours on a standard GPU. (It’s a steal.)
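The arithmetic behind that estimate can be sketched in a few lines of Python. The rate of 2 compute units per hour is only what the figures above imply (100 units for about 50 hours); actual rates depend on the GPU and are shown in the Colab resources panel. The function name is hypothetical.

```python
def hours_remaining(compute_units: float, units_per_hour: float) -> float:
    """Estimate how many hours of runtime the remaining compute units buy."""
    if units_per_hour <= 0:
        raise ValueError("units_per_hour must be positive")
    return compute_units / units_per_hour


# Rate implied by the figures above: 100 units for about 50 hours.
# This is an assumption, not an official Colab number.
IMPLIED_STANDARD_GPU_RATE = 100 / 50  # about 2 units per hour

print(hours_remaining(100, IMPLIED_STANDARD_GPU_RATE))
```

Plug in the usage rate Colab reports for your runtime to get a more realistic number.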

With a paid plan, you have the option to use a Premium GPU, which is an A100 processor. It comes in handy when you need to train Dreambooth models fast.

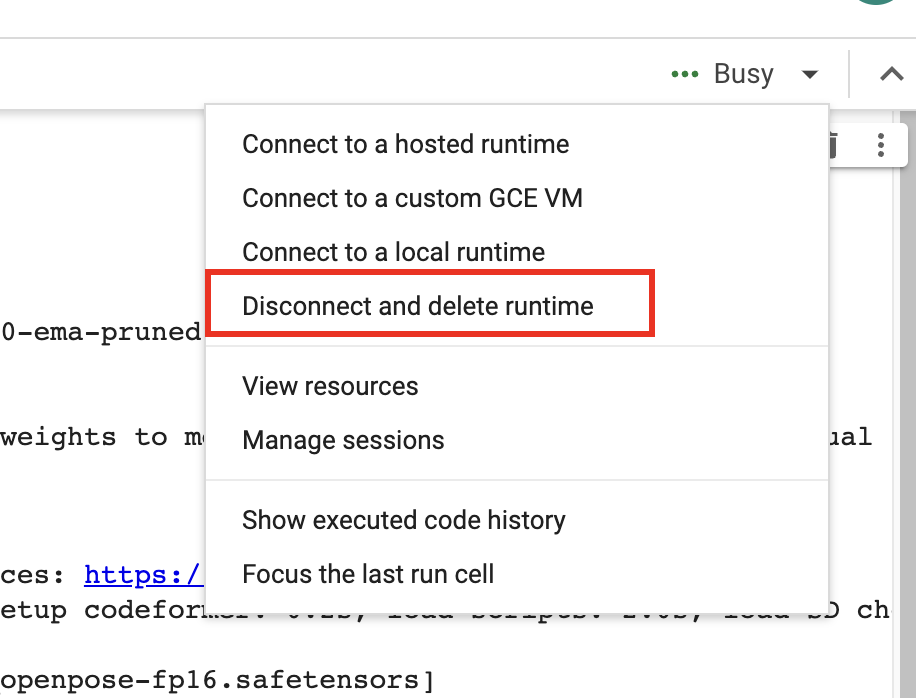

When you use Colab for AUTOMATIC1111, be sure to disconnect and shut down the notebook when you are done. It will consume compute units when the notebook is kept open.

You will need to sign up with one of the plans to use the Stable Diffusion Colab notebook. They have blocked the free usage of AUTOMATIC1111.

Alternatives

Think Diffusion provides a fully managed AUTOMATIC1111/Forge/ComfyUI web service. It costs a bit more than Colab but saves you the trouble of installing models and extensions, and it starts up faster. They offer 20% extra credit to our readers. (Affiliate link)

Step-by-step instructions to run the Colab notebook

Step 0. Sign up for a Colab Pro or Pro+ plan. (I use Colab Pro.)

Step 1. Open the Colab notebook in Quick Start Guide.

You should see the notebook with the second cell below.

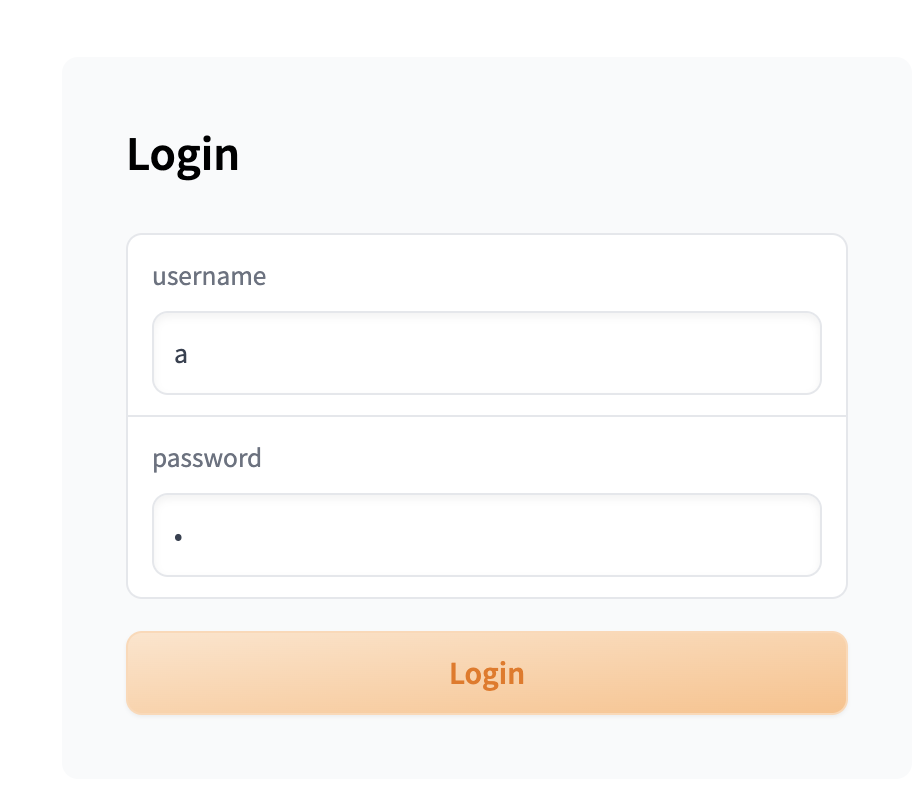

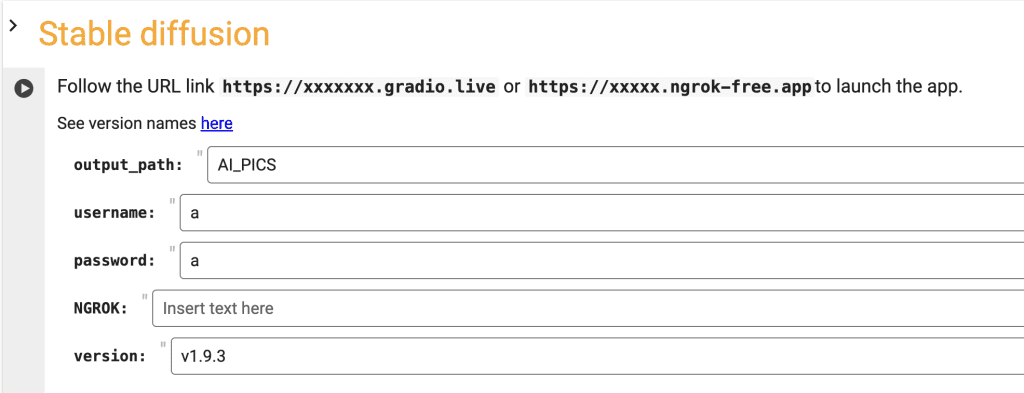

Step 2. Set the username and password. You will need to enter them before using AUTOMATIC1111.

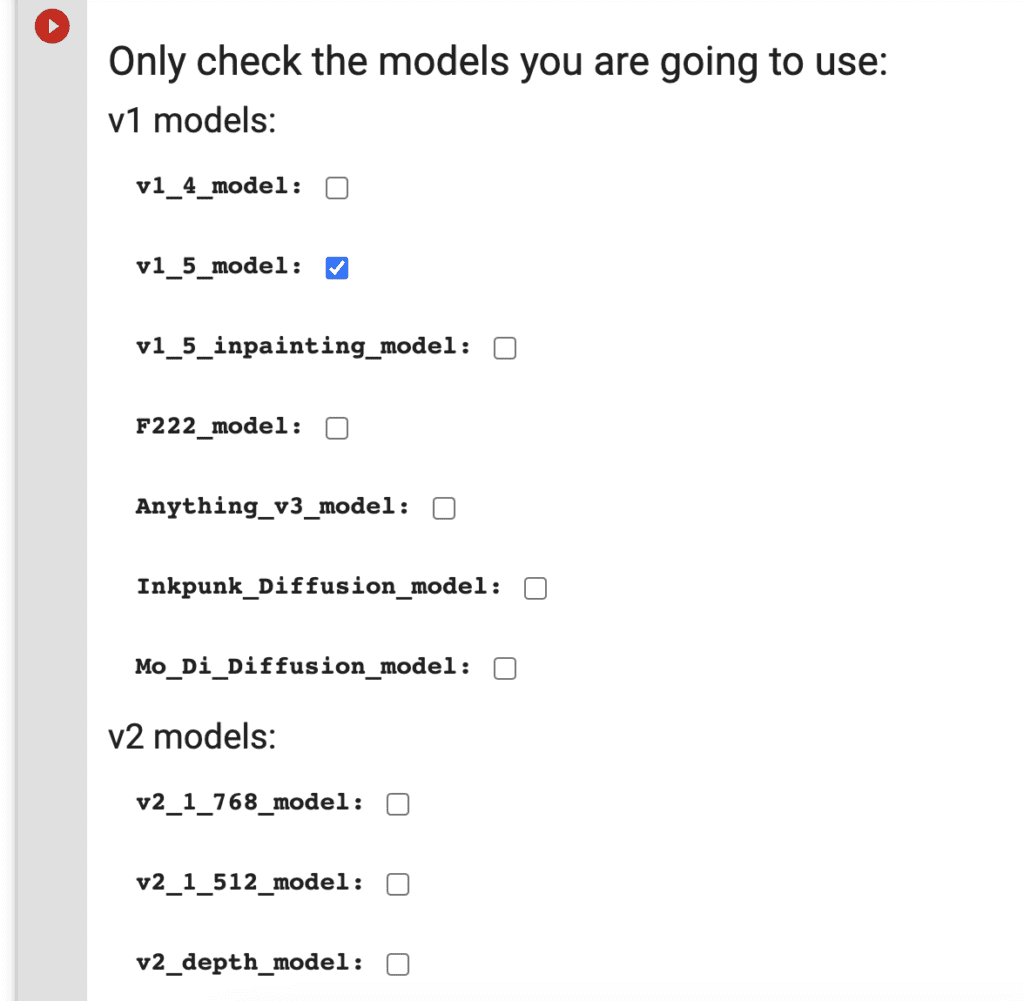

Step 3. Check the models you want to load. If you are a first-time user, you can use the default settings.

Step 4. Click the Play button on the left of the cell to start.

Step 5. It will install A1111 and the models in the Colab environment.

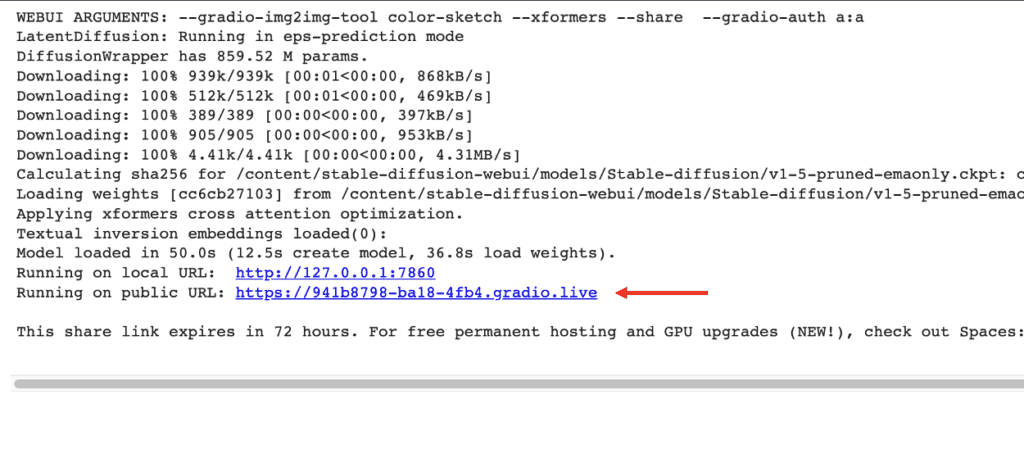

Step 6. Follow the gradio.live link to start AUTOMATIC1111.

Step 7. Enter the username and password you specified in the notebook.

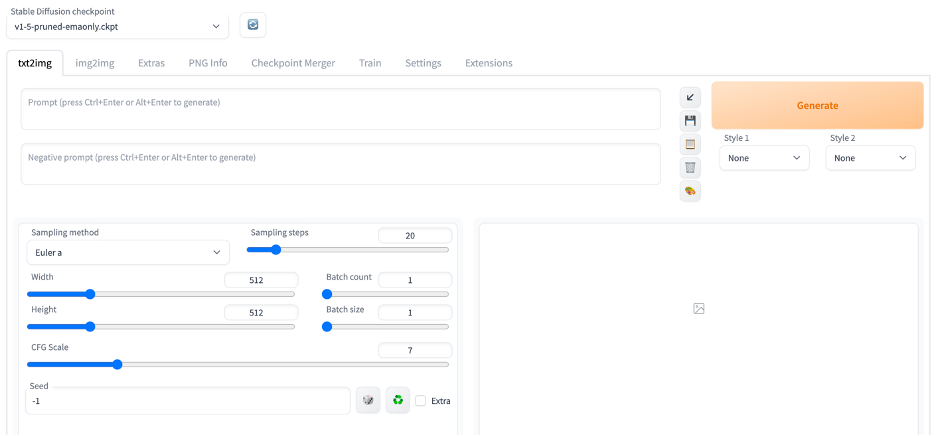

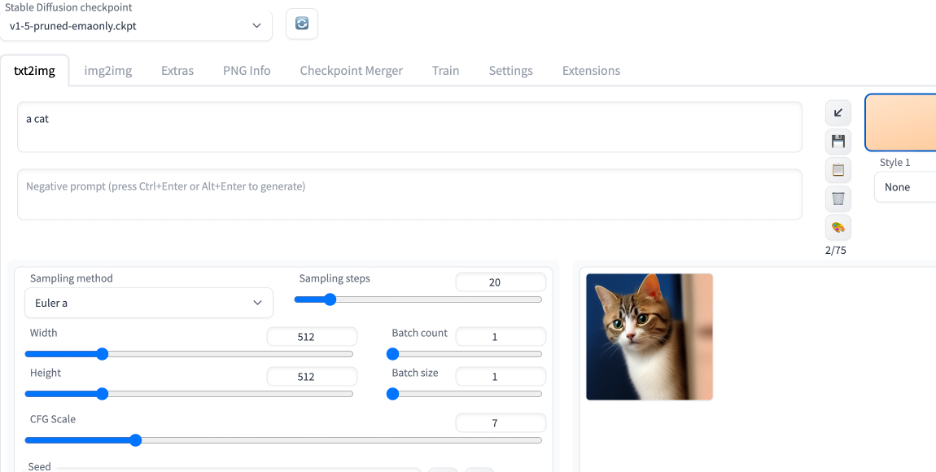

Step 8. You should see the AUTOMATIC1111 GUI after you log in.

Put “a cat” in the prompt text box and press Generate to test Stable Diffusion. It should generate an image of a cat.

ngrok (Optional)

If you run into display issues with the GUI, you can try using ngrok instead of Gradio to establish the public connection. It is a more stable alternative to the default gradio connection.

You will need to set up a free account and get an authtoken.

- Go to https://ngrok.com/

- Create an account

- Verify email

- Copy the authtoken from https://dashboard.ngrok.com/get-started/your-authtoken and paste it in the ngrok field in the notebook.

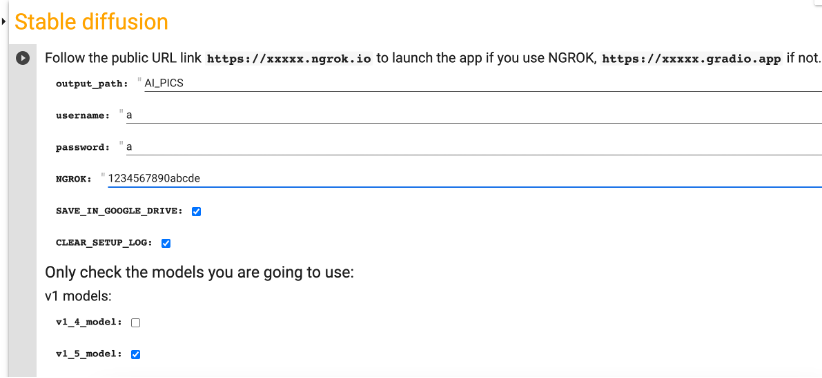

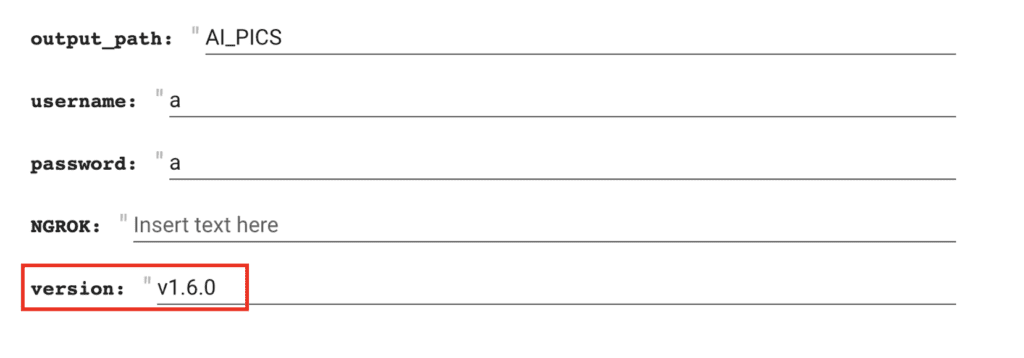

The Stable Diffusion cell in the notebook should look like below after you put in your ngrok authtoken.

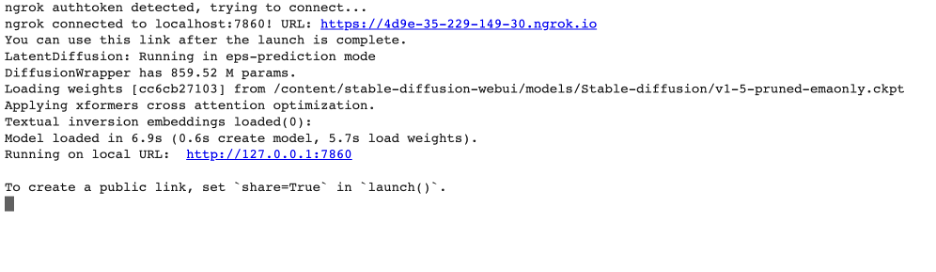

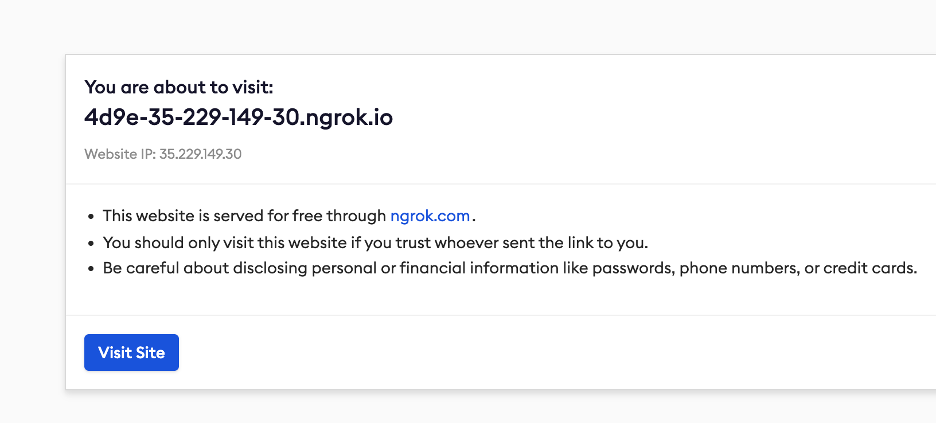

Click the play button on the left to start running. When it is done loading, you will see a link to ngrok.io in the output under the cell. Click the ngrok.io link to start AUTOMATIC1111. The first link in the example output below is the ngrok.io link.

When you visit the ngrok link, it should show a message like below

Click Visit Site to start the AUTOMATIC1111 GUI. Occasionally, you will see a warning message that the site is unsafe to visit. This is likely because someone previously used the same ngrok link to host something malicious. Since you are the one who created this link, you can ignore the safety warning and proceed.

When you are done

When you finish using the notebook, don’t forget to click “Disconnect and delete runtime” in the top right drop-down menu. Otherwise, you will continue to consume compute credits.

Computing resources and compute units

To view computing resources and credits, click the downward caret next to the runtime type (e.g., T4, High RAM) on the top right. You will see the remaining compute units and the usage rate.

Models available

For your convenience, the notebook has options to load some popular models. You will find a brief description of them in this section.

v1.5 models

v1.5 model

The v1.5 model was released after v1.4. It is the last v1 model. Images from this model are very similar to those from v1.4. You can treat the v1.5 model as the default v1 base model.

v1.5 inpainting model

The official v1.5 model trained for inpainting.

Realistic Vision

Realistic Vision v2 is good for generating anything realistic, whether people, objects, or scenes.

F222

F222 is good at generating photo-realistic images. It is good at generating females with correct anatomy.

Caution: F222 is prone to generating explicit images. Suppress explicit images with a prompt “dress” or a negative prompt “nude”.

Dreamshaper

Dreamshaper is easy to use and good at generating a popular photorealistic illustration style. It is an easy way to “cheat” and get good images without a good prompt!

Open Journey Model

Open Journey is a model fine-tuned with images generated by Midjourney v4. It has a different aesthetic and is a good general-purpose model.

Triggering keyword: mdjrny-v4 style

Anything v3

Anything V3 is a special-purpose model trained to produce high-quality anime-style images. You can use danbooru tags (like 1girl, white hair) in the text prompt.

It’s useful for casting celebrities in anime style, which can then be blended seamlessly with illustrative elements.

Inkpunk Diffusion

Inkpunk Diffusion is a Dreambooth-trained model with a very distinct illustration style.

Use keyword: nvinkpunk

v2 models

v2 models are the newest base models released by Stability AI. They are generally harder to use and are not recommended for beginners.

v2.1 768 model

The v2.1-768 model is the latest high-resolution v2 model. The native resolution is 768×768 pixels. Make sure to set at least one side of the image to 768 pixels. It is imperative to use negative prompts in v2 models.

You will need Colab Pro to use this model because it needs a high RAM instance.

v2 depth model

v2 depth model extracts depth information from an input image and uses it to guide image generation. See the tutorial on depth-to-image.

SDXL model

This Colab notebook supports the SDXL 1.0 base and refiner models.

Select SDXL_1 to load the SDXL 1.0 model.

Important: Don’t use a VAE from v1 models. Go to Settings > Stable Diffusion. Set SD VAE to Automatic or None.

Check out some SDXL prompts to get started.

Other models

Here are some models that you may be interested in.

See more realistic models here.

Dreamlike Photoreal

Dreamlike Photoreal Model Page

Model download URL

https://huggingface.co/dreamlike-art/dreamlike-photoreal-2.0/resolve/main/dreamlike-photoreal-2.0.safetensors

Dreamlike Photoreal model is good at generating beautiful females with correct anatomy. It is similar to F222.

Triggering keyword: photo

Caution: This model is prone to generating explicit photos. Suppress explicit images with a prompt “dress” or a negative prompt “nude”.

Lyriel

Lyriel excels in artistic style and is good at rendering a variety of subjects, ranging from portraits to objects.

Model download URL:

https://civitai.com/api/download/models/50127

Deliberate v2

Deliberate v2 is a well-trained model capable of generating photorealistic illustrations, anime, and more.

Installing models

There are two ways to install models that are not on the model selection list.

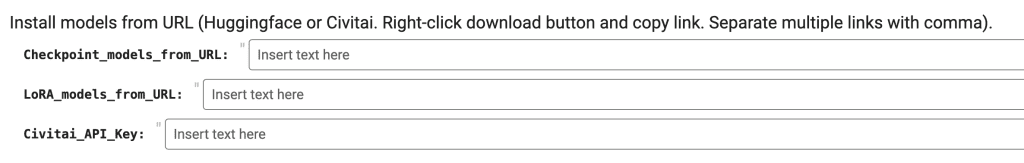

- Use the Checkpoint_models_from_URL and Lora_models_from_URL fields.

- Put model files in your Google Drive.

Install models using URLs

You can only install checkpoint or LoRA models using this method.

Put the download URLs in the field. A download URL is a link that starts the file download when you visit it in your browser.

- Checkpoint_models_from_URL: Use this field for checkpoint models.

- Lora_models_from_URL: Use this field for LoRA models.

Some models on CivitAI need an API key to download. Go to the account page on CivitAI to create a key and put it in Civitai_API_Key.

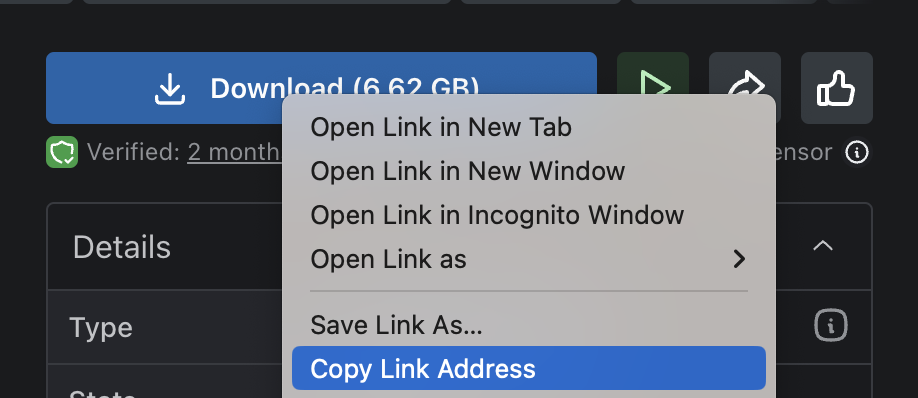

Below is an example of getting the download link on CivitAI.

Remove everything after the first question mark (?).

For example, change https://civitai.com/api/download/models/993999?type=Model&format=SafeTensor to https://civitai.com/api/download/models/993999.

Put it in the Checkpoint_models_from_URL or Lora_models_from_URL field, depending on the model type.
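If you have several links to clean up, the same trimming can be done in Python. This is a minimal sketch using only the standard library; the function name strip_query is hypothetical and not part of the notebook.

```python
from urllib.parse import urlsplit, urlunsplit


def strip_query(url: str) -> str:
    """Return the URL without its query string (everything after the first '?')."""
    parts = urlsplit(url)
    # Keep scheme, host, and path; drop the query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


print(strip_query(
    "https://civitai.com/api/download/models/993999?type=Model&format=SafeTensor"
))
# prints https://civitai.com/api/download/models/993999
```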

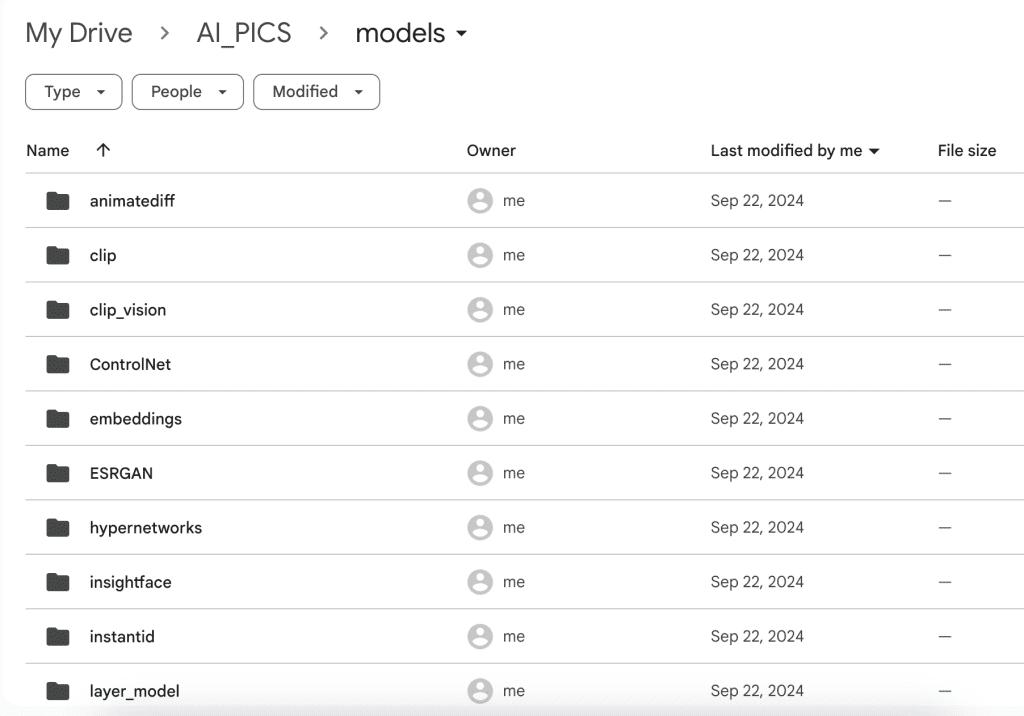

Installing models in Google Drive

After running the notebook for the first time, you should see the folder AI_PICS > models created in your Google Drive. The folder structure inside this folder mirrors AUTOMATIC1111’s and is designed to share models with other notebooks from this site.

Put your model files in the corresponding folder. For example,

- Put checkpoint model files in AI_PICS > models > Stable-diffusion.

- Put LoRA model files in AI_PICS > models > Lora.

You will need to restart the notebook to see the new models.
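The folder layout above can be expressed as a small helper that copies a model file into the right place. This is only a sketch: install_model and the drive_root argument are hypothetical, and the commonly used Colab mount point /content/drive/MyDrive is an assumption about your setup rather than something this notebook guarantees.

```python
import shutil
from pathlib import Path

# Subfolders named in this article, relative to AI_PICS/models.
MODEL_SUBFOLDERS = {
    "checkpoint": "Stable-diffusion",
    "lora": "Lora",
}


def install_model(model_file: Path, kind: str, drive_root: Path) -> Path:
    """Copy a model file into AI_PICS/models/<subfolder> under drive_root."""
    if kind not in MODEL_SUBFOLDERS:
        raise ValueError(f"unknown model kind: {kind!r}")
    target_dir = drive_root / "AI_PICS" / "models" / MODEL_SUBFOLDERS[kind]
    target_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(model_file, target_dir / model_file.name))


# Example call (assumed mount point, hypothetical file name):
# install_model(Path("my_lora.safetensors"), "lora", Path("/content/drive/MyDrive"))
```

Dragging the file into the folder in the Google Drive web interface achieves the same result.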

Installing extensions from URL

This field can be used to install any number of extensions. To do so, you will need the URL of the extension’s GitHub page.

For example, put in the following if you want to install the Civitai model extension.

https://github.com/civitai/sd_civitai_extension

You can also install multiple extensions. The URLs need to be separated with commas. For example, the following URLs install the Civitai and the multi-diffusion extensions.

https://github.com/civitai/sd_civitai_extension,https://github.com/pkuliyi2015/multidiffusion-upscaler-for-automatic1111
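To illustrate the comma-separated format, here is a sketch of how such a field could be split into individual repository URLs. It is not the notebook’s actual code, and both function names are hypothetical.

```python
def parse_extension_urls(field: str) -> list[str]:
    """Split a comma-separated field into clean URLs, ignoring empty entries."""
    return [url.strip() for url in field.split(",") if url.strip()]


def extension_folder(url: str) -> str:
    """Repository name, which a plain `git clone` would use as the folder name."""
    return url.rstrip("/").rsplit("/", 1)[-1].removesuffix(".git")


field = (
    "https://github.com/civitai/sd_civitai_extension,"
    "https://github.com/pkuliyi2015/multidiffusion-upscaler-for-automatic1111"
)
for url in parse_extension_urls(field):
    print(extension_folder(url))
```

Stray spaces around the commas are tolerated by this sketch, but whether the notebook itself tolerates them is not something I have verified.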

Extra arguments to webui

You can add extra arguments to the Web-UI by using the Extra_arguments field.

For example, if you use the lycoris extension, it is handy to use the extra webui argument --lyco-dir to specify a custom lycoris model directory in your Google Drive.

Other useful arguments are

- --api. Allows API access. Useful for some applications, e.g. the Photoshop Automatic1111 plugin.
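To make the idea concrete, here is a minimal sketch of how a launch command could combine the login, the optional ngrok authtoken, and the Extra_arguments field. It is not the notebook’s actual code: the function name is hypothetical and the LyCORIS path is a made-up example. The flags --share, --gradio-auth, and --ngrok are AUTOMATIC1111 command-line options as far as I know.

```python
import shlex


def build_webui_args(username, password, ngrok_token="", extra_arguments=""):
    """Assemble a list of Web-UI command-line arguments (illustrative only)."""
    args = ["--gradio-auth", f"{username}:{password}"]
    if ngrok_token:
        # Use ngrok for the public link when an authtoken is given.
        args += ["--ngrok", ngrok_token]
    else:
        # Otherwise fall back to the default gradio.live link.
        args.append("--share")
    # shlex.split respects quotes, so paths with spaces survive intact.
    args += shlex.split(extra_arguments)
    return args


# Hypothetical LyCORIS folder in Google Drive.
print(build_webui_args(
    "user", "pass",
    extra_arguments="--api --lyco-dir '/content/drive/MyDrive/AI_PICS/models/LyCORIS'",
))
```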

Version

You can now specify the version of Stable Diffusion WebUI you want to load. Use this at your own risk, as I only test the version saved in the notebook.

Notes on some versions

- v1.6.0: You need to add --disable-model-loading-ram-optimization in the Extra_arguments field.

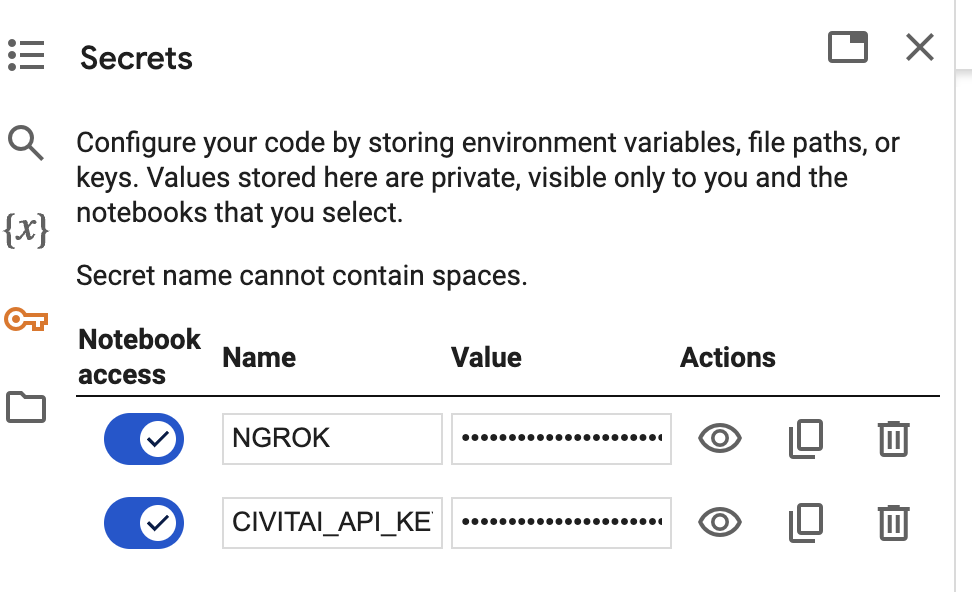

Secrets

This notebook supports storing API keys in Colab Secrets, in addition to the fields in the notebook. If a key is defined in Secrets, the notebook always uses it. The notebook currently supports these two API keys (all uppercase):

- NGROK: Ngrok API key.

- CIVITAI_API_KEY: API key for CivitAI.

You will need to enable Notebook access for each key like above.
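The precedence described above (a secret wins over the notebook field) can be sketched as follows. The function resolve_key is hypothetical and is not the notebook’s own code. In Colab, the getter would be userdata.get from google.colab, which raises an error when the secret is missing or notebook access is not enabled; the sketch treats any such error as "no secret".

```python
def resolve_key(name, field_value, get_secret):
    """Return the secret if it is available, otherwise the notebook field value."""
    try:
        secret = get_secret(name)
    except Exception:
        # Missing secret or notebook access not granted: fall back to the field.
        secret = None
    return secret or field_value


# In Colab (assumption about how you would wire it up):
#   from google.colab import userdata
#   ngrok_token = resolve_key("NGROK", ngrok_field, userdata.get)

# Stand-in getter so the sketch runs outside Colab.
fake_secrets = {"NGROK": "token-from-secrets"}
print(resolve_key("NGROK", "token-from-field", fake_secrets.__getitem__))
print(resolve_key("CIVITAI_API_KEY", "key-from-field", fake_secrets.__getitem__))
```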

Extensions

ControlNet

ControlNet is a Stable Diffusion extension that can copy the composition and pose of the input image and more. ControlNet has taken the Stable Diffusion community by storm because there is so much you can do with it.

This notebook supports ControlNet. See the tutorial article.

You can put your custom ControlNet models in the AI_PICS/ControlNet folder.

Deforum – Making Videos using Stable Diffusion

You can make videos with text prompts using the Deforum extension. See this tutorial for a walkthrough.

Regional Prompter

Regional prompter lets you use different prompts for different regions of the image. It is a valuable extension for controlling the composition and placement of objects.

After Detailer

Comparison: original vs. with After Detailer enabled.

The After Detailer (adetailer) extension fixes faces and hands automatically when you generate images.

Openpose editor

Openpose editor is an extension that lets you edit the openpose control image. It is used with ControlNet and is useful for manipulating the pose in a generated image.

AnimateDiff

AnimateDiff lets you create short videos from a text prompt. You can use any Stable Diffusion model and LoRA. Follow this tutorial to learn how to use it.

text2video

Text2video lets you create short videos from a text prompt using a model called Modelscope. Follow this tutorial to learn how to use it.

Infinite Image Browser

The Infinite Image Browser extension lets you manage your generations right in the A1111 interface. The secret key is SDA.

Frequently asked questions

Do I need a paid Colab account to use the notebook?

Yes, you need a Colab Pro or Pro+ account to use this notebook. Google has blocked the free usage of Stable Diffusion.

Is there any alternative to Google Colab?

Yes, Think Diffusion provides fully managed AUTOMATIC1111/Forge/ComfyUI as an online web service. They offer 20% extra credit to our readers. (Affiliate link)

Do I need to use ngrok?

You don’t need to use ngrok to use the Colab notebook. In my experience, ngrok provides a more stable connection between your browser and the GUI. If you experience issues like buttons not responding, you should try ngrok.

What is the password for the Infinite Image Browser?

SDA

Why do I keep getting disconnected?

Two possible reasons:

- There’s a human verification shortly after starting each Colab notebook session. You will get disconnected if you do not respond to it. Make sure to switch back to the Colab notebook and check for verification.

- You are using a free account. Google has blocked A1111 in Colab. Get Colab Pro.

Can I use the dreambooth models I trained?

Yes, put the model file in the corresponding folder in Google Drive.

- Checkpoint models: AI_PICS > models > Stable-diffusion.

- LoRA models: AI_PICS > models > Lora.

How to enable API?

You can use AUTOMATIC1111 as an API server. Add the following to the Extra_arguments field.

--api

The server’s URL is the same as the one you use to access the Web-UI (i.e., the gradio or ngrok link).
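As a rough sketch of what an API call could look like, the code below builds a text-to-image request and decodes the reply using only the Python standard library. It assumes the Web-UI was started with --api and exposes the /sdapi/v1/txt2img route, which returns images as base64 strings. The function names and the example URL are hypothetical, and depending on your login settings the API may need extra authentication that is not shown here.

```python
import base64
import json
from urllib import request


def build_txt2img_request(base_url: str, prompt: str, steps: int = 20) -> request.Request:
    """Build a POST request for the text-to-image route (nothing is sent yet)."""
    payload = {"prompt": prompt, "steps": steps}
    return request.Request(
        url=base_url.rstrip("/") + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def decode_images(response_body: bytes) -> list[bytes]:
    """Decode the base64-encoded images in the JSON response into raw bytes."""
    images = json.loads(response_body)["images"]
    return [base64.b64decode(image) for image in images]


# Usage (replace the placeholder with your own gradio or ngrok link):
# req = build_txt2img_request("https://your-link.gradio.live", "a cat")
# with request.urlopen(req) as resp:
#     image_bytes = decode_images(resp.read())[0]
# with open("cat.png", "wb") as f:
#     f.write(image_bytes)
```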

Why do my SDXL images look garbled?

Check to make sure you are not using a VAE from v1 models. Check Settings > Stable Diffusion > SD VAE. Set it to None or Automatic.

Next Step

If you are new to Stable Diffusion, check out the Absolute beginner’s guide.